Here we get to the original purpose behind the blog and the reason I started thinking about these issues in the first place. It’s also rather self-serving, since it really gets to be about my own work. (Hence the reason it’s three months late? Not really; I had thought of it as background material and simply got distracted.)

At least one aspect of my work has centered around taking otherwise-unintelligible models and trying to come up with the means of allowing humans to understand, at least approximately, what they are doing. This was the focus of my PhD research (which now seems painfully naive in retrospect, but we’ll get to some of it, anyway), but the ideas have, in fact, a considerably longer history. I recall hearing about neural networks in the early ’90s when they were in their first wave of popularity and thinking “Yes, but how do you understand what it’s doing? And is it science if you don’t?” *** These largely stayed in the background until Jerry Friedman suggested machine learning diagnostics as a useful topic to investigate (among a number of others) and I found that I had more ideas about this than about building yet more seat-of-the-pants heuristics for making prediction functions. ***

Most of this post will be a run-down of machine learning diagnostics, rather aptly described as “X-raying the Black Box”, *** although Art Owen probably more accurately likens what is done to tomography. However, in doing this, I hope to highlight the key question for all of this blog: is the effort put into developing the tools below of any real scientific value? Or is it all simply cultural affectation that panders to our desire to feel in control of something that we really ought to let run automatically? Models and software that have some of the tools below built into them certainly sell better, but that doesn’t mean that they’re more useful. And if they are useful for something besides making humans feel better about themselves, what is that, and can we keep it in mind to design these tools better? It is, as I think I have foreshadowed, difficult to come up with real, practical examples to justify the time I’ve spent on the problem, although the tools I developed work quite well. I do have the beginnings of some notions about this (most of them run into a huge roadblock in the form of statistical/machine learning reluctance to examine mechanistic models; see Post 6), but we’ll need to get to what is actually done by way of machine learning diagnostics first.

So let’s look at the basic problem. We have a function F(X), where X = (x1,…,xp) is a vector; we can very cheaply obtain the value of F(X) at any X, but we can’t write down F(X) in any nice algebraic form (think of a neural network or a bagged decision tree from previous posts). What would we like to understand about F? Most of the work on this has been in terms of global models:

– Which elements of X make a difference to the value of F?

– How does F change with these elements?

(There are also local means of interpreting F; I’ll post about these later.***) Most of these center around the problem of “What is the importance and the effect of x3?” Or, in more immediately understandable terms, “What happens if we change x3?” Fortunately, F(X) is cheap to evaluate, so we can try this and look at

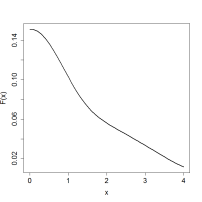

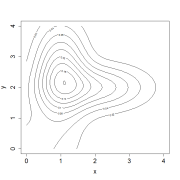

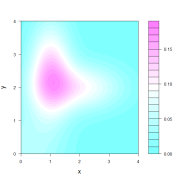

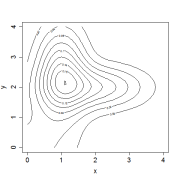

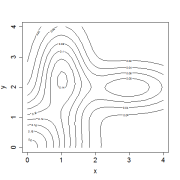

$f_3(z) = F(x_1, x_2, z, x_4, \ldots, x_p)$ as we change z (note that this is a direct analogue of the linear regression interpretation “all other terms held constant”). This can then be represented graphically as a function of z (see the last blog post on the intelligibility of visualizable relationships even when they aren’t algebraically nice). I’ve done this in the left plot below for x2 in the function from the previous post. We get a picture of what changing a variable does to F, and if the plot for x3 is close to flat, we can decide that x3 really isn’t all that important.
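A minimal Python sketch of such a slice, assuming NumPy and a hypothetical stand-in for F (the function from the previous post is not reproduced here). Python indexes from zero, so x3 is column 2. Evaluating the same slice at two base points shows why the choice of the other values matters: for this toy F the x3 curve is flat when x2 = 0 but has slope 2 when x2 = 1.

```python
import numpy as np


def F(X):
    """Hypothetical black-box prediction function (a stand-in, not the one
    from the previous post). X has shape (n, p); returns n predictions."""
    X = np.atleast_2d(X)
    return X[:, 0] + 2.0 * X[:, 1] * X[:, 2] + np.sin(np.pi * X[:, 3])


def slice_curve(F, x_base, j, z_grid):
    """Return F with coordinate j replaced by each z in z_grid and every
    other coordinate held at its value in x_base."""
    z_grid = np.asarray(z_grid, dtype=float)
    X = np.tile(np.asarray(x_base, dtype=float), (len(z_grid), 1))
    X[:, j] = z_grid  # vary only coordinate j
    return F(X)


z = np.linspace(0.0, 1.0, 5)
# x3 is column 2 in zero-based indexing.
curve_a = slice_curve(F, [0.5, 0.0, 0.5, 0.5], 2, z)  # x2 = 0: flat in x3
curve_b = slice_curve(F, [0.5, 1.0, 0.5, 0.5], 2, z)  # x2 = 1: slope 2 in x3
```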

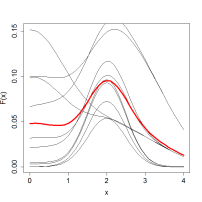

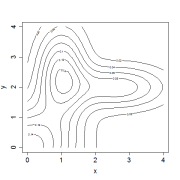

This is fairly reasonable, but presents the problem that the plot you get depends on the values of x1, x2 and all the other elements of X. The right-hand plot above shows the relationship graphed at 10 other values of the remaining elements of X.

So what to do? Well, the obvious thing to do is to average. There are a bunch of ways to do this. Jerry Friedman defined the notion of partial dependence in one of his seminal papers: you average the relationship over the values of the other variables that appear in your data set. Leo Breiman defined a notion of variable importance in a similar manner by saying “mix up the column of x3 values (i.e. randomly permute these values with respect to the rest of the data set) and measure how much the value of F(X) changes.” There have been a number of variations on this theme. In the right-hand plot above I’ve approximated Jerry’s partial dependence by averaging the 10 curves to produce the thick partial dependence line.
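Both averaging schemes are easy to sketch. Here `partial_dependence` follows the description above (set x3 to each grid value in every row of the data and average the predictions), and `permutation_change` measures the mean squared change in F(X) after permuting one column. Breiman's original random-forest measure looks at the increase in out-of-bag prediction error instead, so treat this as the simplified version described in the text. The toy F, the simulated data and the function names are all hypothetical.

```python
import numpy as np


def F(X):
    """Hypothetical black-box prediction function; X has shape (n, p)."""
    X = np.atleast_2d(X)
    return X[:, 0] + 2.0 * X[:, 1] * X[:, 2] + np.sin(np.pi * X[:, 3])


def partial_dependence(F, data, j, z_grid):
    """For each z, set column j to z in every row of the data set and
    average the predictions."""
    pd = np.empty(len(z_grid))
    for k, z in enumerate(z_grid):
        X = data.copy()
        X[:, j] = z
        pd[k] = F(X).mean()
    return pd


def permutation_change(F, data, j, n_repeats=20, seed=0):
    """Permute column j relative to the rest of the data and return the
    mean squared change in F(X), averaged over n_repeats permutations."""
    rng = np.random.default_rng(seed)
    base = F(data)
    changes = []
    for _ in range(n_repeats):
        X = data.copy()
        X[:, j] = rng.permutation(X[:, j])
        changes.append(np.mean((F(X) - base) ** 2))
    return float(np.mean(changes))


rng = np.random.default_rng(1)
data = rng.uniform(size=(500, 5))  # column 4 (x5) is never used by F
z = np.linspace(0.0, 1.0, 11)
pd_x3 = partial_dependence(F, data, 2, z)  # x3 is column 2 (zero-based)
imp = [permutation_change(F, data, j) for j in range(5)]
```

For this F the partial dependence of x3 is a straight line whose slope is twice the data average of x2, and the unused column gets an importance of exactly zero.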

These can all be viewed as some variation on the theme of what is called the functional ANOVA. For those of you used to thinking in rather stodgy statistical terms, fANOVA is just the logical extension of a multi-way ANOVA when you let every possible value of each xi be its own factor level (we can do this because we have a response F(X) at each factor level combination). For those of you for whom the last sentence was so much gibberish, we replace the average above with integrals. So we can define

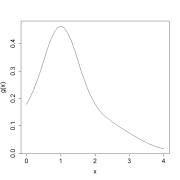

$$f_3(z) = \int \cdots \int F(x_1, x_2, z, x_4, \ldots, x_p)\, dx_1\, dx_2\, dx_4 \cdots dx_p$$

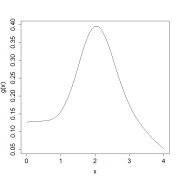

The point of this is that it also allows us to examine how much difference there is due to pairs of variables, after the “main effects” have been taken out:

$$f_{2,3}(z_2, z_3) = \int \cdots \int F(x_1, z_2, z_3, x_4, \ldots, x_p)\, dx_1\, dx_4 \cdots dx_p - f_2(z_2) - f_3(z_3)$$

this can be extended to combinations of three variables and so on; it gives us a representation of the form

$$F(X) = f_0 + \sum_i f_i(x_i) + \sum_{i<j} f_{i,j}(x_i, x_j) + \cdots$$
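On a grid the integrals become averages, so the main and pairwise effects can be sketched with NumPy means. This assumes independent uniform inputs on [0, 1]^p (one possible integrating measure) and a midpoint-rule grid. Each component is centered, with f0 subtracted from the main effects, which is the usual convention that makes the pieces add up in the sum representation; the informal formulas above leave these constants implicit. The toy F and the name `fanova_grid` are hypothetical.

```python
import itertools

import numpy as np


def F(X):
    """Hypothetical black-box prediction function; X has shape (n, p)."""
    X = np.atleast_2d(X)
    return X[:, 0] + 2.0 * X[:, 1] * X[:, 2] + np.sin(np.pi * X[:, 3])


def fanova_grid(F, p, m=20):
    """Evaluate F on an m^p midpoint grid over [0, 1]^p and return the grid
    nodes, the constant f0, centered main effects and pair effects."""
    nodes = (np.arange(m) + 0.5) / m  # midpoint-rule nodes
    mesh = np.meshgrid(*([nodes] * p), indexing="ij")
    points = np.stack([g.ravel() for g in mesh], axis=1)
    values = F(points).reshape((m,) * p)  # axis a holds coordinate a

    f0 = values.mean()
    main = {}
    for i in range(p):
        others = tuple(a for a in range(p) if a != i)
        main[i] = values.mean(axis=others) - f0  # shape (m,)
    pair = {}
    for i, j in itertools.combinations(range(p), 2):
        others = tuple(a for a in range(p) if a not in (i, j))
        g = values.mean(axis=others)  # shape (m, m), axes ordered (i, j)
        pair[(i, j)] = g - main[i][:, None] - main[j][None, :] - f0
    return nodes, f0, main, pair


nodes, f0, main, pair = fanova_grid(F, p=4, m=20)
```

For this F, x1 and x2 enter additively, so their pair effect (index (0, 1)) vanishes, while x2 and x3 (index (1, 2)) have a genuine interaction equal to 2(x2 - 1/2)(x3 - 1/2).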

There are lots of nice properties of this framework; most relevant here is that we can ascribe an amount of variance explained to each of these effects and we can parse out “How important is x3?” in terms of the variance due to all components that include x3. We can also plot f3(x3) as a picture of its effect.
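The “variance due to all components that include x3” is what the sensitivity-analysis literature calls a total-effect (Sobol) index. One standard Monte Carlo route, swapped in here rather than taken from the post, is Jansen's estimator: resample only x_j and take half the mean squared change in F. The sketch assumes independent uniform inputs on [0, 1]^p and the same hypothetical toy F; `total_variance` is a hypothetical name.

```python
import numpy as np


def F(X):
    """Hypothetical black-box prediction function; X has shape (n, p)."""
    X = np.atleast_2d(X)
    return X[:, 0] + 2.0 * X[:, 1] * X[:, 2] + np.sin(np.pi * X[:, 3])


def total_variance(F, p, n=200_000, seed=0):
    """Jansen-style Monte Carlo estimate, for each j, of the variance of F
    due to all fANOVA components that include x_j, with independent
    uniform inputs. Also returns the sample variance of F."""
    rng = np.random.default_rng(seed)
    A = rng.uniform(size=(n, p))
    B = rng.uniform(size=(n, p))
    fA = F(A)
    total = np.empty(p)
    for j in range(p):
        ABj = A.copy()
        ABj[:, j] = B[:, j]  # resample x_j only, keep everything else
        total[j] = 0.5 * np.mean((fA - F(ABj)) ** 2)
    return total, fA.var()


total, var_F = total_variance(F, p=5)
share = total / var_F  # fraction of Var F(X) from terms containing x_j
```

For this toy F the exact values are 1/12 for x1 and 1/12 + 1/36 = 1/9 for x3 (its main effect plus its interaction with x2), and the unused x5 gets exactly zero.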

This framework was a large part of my PhD thesis (although, to be fair, Charles Roosen, an earlier student of Jerry Friedman, laid a lot of the groundwork). You might think of plotting all the single-variable effects, and all the pairwise effects, in a grid. If these explain most of the changes in the predictions F(X) over your input space, then you can think of F(X) as being nearly like a generalized additive model (exactly as written above, stopping at functions of two variables) in which all the terms are visually interpretable. One of the things I did was to look at combinations of three variables and ask “Are there things that this interpretation is missing, and which variables do they involve?”
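The check described here, asking how much of F a sum of one- and two-variable components misses, can be sketched by building every component with at most k variables from grid averages by inclusion-exclusion and measuring the leftover variance. Assumptions as before: independent uniform inputs, a midpoint grid, and a hypothetical G that contains one genuine three-way interaction so that the second-order reconstruction has something to miss. The helper names are hypothetical.

```python
import itertools

import numpy as np


def G(X):
    """Hypothetical black box with one genuine three-way interaction."""
    X = np.atleast_2d(X)
    c = X - 0.5
    return X[:, 0] + X[:, 1] + 8.0 * c[:, 0] * c[:, 1] * c[:, 2]


def grid_values(F, p, m):
    """Evaluate F on an m^p midpoint grid over [0, 1]^p."""
    nodes = (np.arange(m) + 0.5) / m
    mesh = np.meshgrid(*([nodes] * p), indexing="ij")
    points = np.stack([g.ravel() for g in mesh], axis=1)
    return nodes, F(points).reshape((m,) * p)


def low_order_reconstruction(values, max_order):
    """Sum of all fANOVA components with at most max_order variables.
    Component f_S = sum over T subset of S of (-1)^(|S|-|T|) g_T, where g_T
    averages the grid over every axis not in T."""
    p = values.ndim

    def g(T):
        others = tuple(a for a in range(p) if a not in T)
        # keepdims lets the average broadcast back over the full grid
        return values.mean(axis=others, keepdims=True) if others else values

    recon = np.zeros_like(values)
    for k in range(max_order + 1):
        for S in itertools.combinations(range(p), k):
            comp = sum((-1) ** (len(S) - len(T)) * g(T)
                       for r in range(len(S) + 1)
                       for T in itertools.combinations(S, r))
            recon = recon + comp
    return recon


nodes, values = grid_values(G, p=3, m=20)
recon2 = low_order_reconstruction(values, max_order=2)
unexplained = np.mean((values - recon2) ** 2) / values.var()
```

Here the residual left by the second-order reconstruction is exactly the three-way term, which is the kind of thing a look at triples of variables is meant to flag.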

Of course, there is a question of “Integrate over what range?” Or, alternatively, with respect to what distribution? In fact, this can have a profound effect on which variables you think are important, or how well you can reconstruct F(X) by adding up functions of one dimension. This was another concern of my thesis, particularly when the values of X that we had left “holes” in the input space that F(X) filled in without much guidance as to what the real relationship was. I’ll come back to some of this later.
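A toy illustration of how much the integrating distribution matters, assuming a hypothetical F = x1 x2: the effect of x1 is a line whose slope is the average of x2 under whatever distribution we integrate against. With x2 uniform the slope is about 0.5; with x2 drawn from a Beta(1, 9), whose mean is 0.1, it is about five times shallower, so the same F makes x1 look far less important. The reference samples and `main_effect` are hypothetical.

```python
import numpy as np


def F(X):
    """Hypothetical black box: a pure product, so x1's effect depends on x2."""
    X = np.atleast_2d(X)
    return X[:, 0] * X[:, 1]


def main_effect(F, reference, j, z_grid):
    """Average F over a reference sample with column j set to each z, i.e.
    integrate the other inputs against the reference distribution; the
    result is centered over z_grid for comparison."""
    out = np.empty(len(z_grid))
    for k, z in enumerate(z_grid):
        X = reference.copy()
        X[:, j] = z
        out[k] = F(X).mean()
    return out - out.mean()


rng = np.random.default_rng(0)
z = np.linspace(0.0, 1.0, 11)
uniform_ref = rng.uniform(size=(5000, 2))
skewed_ref = np.column_stack(
    [rng.uniform(size=5000), rng.beta(1.0, 9.0, size=5000)]
)

effect_uniform = main_effect(F, uniform_ref, 0, z)  # slope about 0.5
effect_skewed = main_effect(F, skewed_ref, 0, z)  # slope about 0.1
```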

For the moment, however, we have that almost all tools for “understanding” machine learning functions come down to representing them approximately in terms of low-dimensional visualizable components. The methods that have come with such tools have been very popular, partly because of them. However, I wonder whether they are really doing anything useful, and I’ve rarely seen such visualization tools used in genuine scientific analysis. They can be (and have been) employed to develop more algebraically tractable representations for F(X). This sometimes improves predictive performance by reducing the variance of the estimated relationship, but mostly comes down to having a relationship that humans can get their heads around, and this still doesn’t answer the question of “Why do we need to?”

*** As an aside, I don’t know how common it is for academics to trace their research interests to questions and ideas that long predate their conceptualization of their work as a discipline. In my first numerical analysis class, we at one point set up linear regression (I had no statistical training at all) and I recall thinking that I wanted to develop methods to decide if it should instead be quadratic or cubic, etc. Some five years or so later I discovered statistical inference. Of course, we always pick out important sign-posts in retrospect.

*** Jerry has an amazing intuition for heuristics and the way that algorithms react to randomness. I quickly decided that competing with him was not really going to be a viable option, but I still lacked the patience for long mathematical exercises; hence a penchant for picking up unconsidered problems, even if they lead into Esoterika (that’s got to be a journal name) on occasion.

*** I wish this were my term; I first came across it as the heading of a NISS program.

*** Yes, I do have a list of all the things I’ve said I would post about.